You may recall that I wrote with great enthusiasm about the wonders of digit span, describing it as a modest bombshell. It is a true measure: every additional digit you can remember is of equal unit size to the next, a scaled score with a true zero. Few psychometric measures have that property (a ratio scale level of measurement in S. S. Stevens’s terms), so the results are particularly informative about real abilities, not just abilities in relation to the rubber ruler of normative samples. If there are any differences, including group differences, on such a basic test, then it is likely they are real.

http://drjamesthompson.blogspot.co.uk/2014/03/digit-span-modest-little-bombshell.html

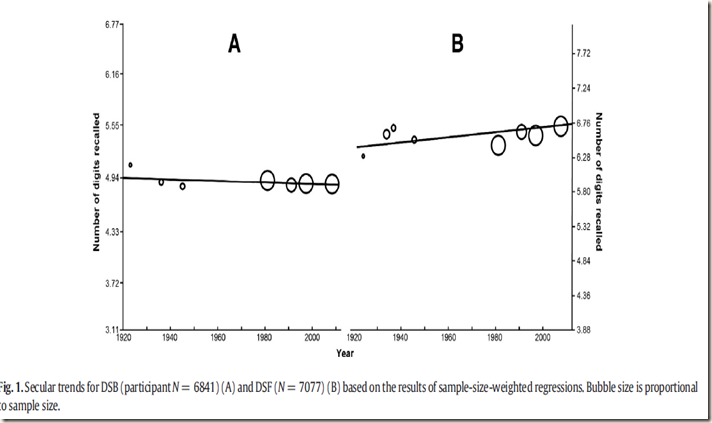

Then last November Gilles Gignac dropped another bombshell. He found that if you looked at total digit span scores since 1923 there was not a glimmer of improvement in this very basic ability. This cast enormous doubt on the Flynn effect being a real effect, rather than an artefact of re-standardisation procedures. Gignac noted that digits forwards and backwards were in opposite directions, but not significantly so.

http://drjamesthompson.blogspot.co.uk/2014/11/have-backward-digits-sunk-flynn.html

Later, I darkly suggested that another paper was in the offing, taking a different view of these data. Picture my pleasure when a barefoot messenger boy arrived at my humble cottage bringing a parchment missive with the unmistakeable seal of Woodley of Menie, the young inheritor of the great estate further North.

Young Woodley tells me that Gignac’s “substantial and impressive body of normative data on historical means of various measures of digit span covering the period from 1923 to 2008” reveals a hidden finding: the not-very-g-loaded digits forwards scores have gone up and the g-loaded digits backwards scores have gone down. This suggests that the fluffy forwards repetition task has benefitted from secular environmental gains, while the harder reversal task reveals the inner rotting core of a dysgenic society. Perturbed at these findings, I made sure that the messenger boy was kept outside, away from the under-maid, while I penned my reply.

Michael A. Woodley of Menie and Heitor B. F. Fernandes (2015) Do opposing secular trends on backwards and forwards digit span evidence the co-occurrence model? A comment on Gignac (2015). Intelligence 50 (2015) 125–130

https://drive.google.com/file/d/0B3c4TxciNeJZSzlJT2tVZUVnZjA/view?usp=sharing

Woodley and Fernandes opine: Gignac's (2015) observations are important as they add to the list of rarely considered cognitive measures the secular trends for which seem to defy the Flynn effect. These include inspection time, which, like digit span, seems to show no secular trends (Nettelbeck & Wilson, 2004), simple visual reaction time, which seems to show quite pronounced secular declines (Silverman, 2010; Woodley, Madison, & Charlton, 2014a; Woodley of Menie, te Nijenhuis, & Murphy, 2015; Woodley, te Nijenhuis, & Murphy, 2013b, 2014b) and also society-level indicators of cognitive capacity, such as per capita rates of macro-innovation and genius, which also indicate pronounced declines starting in the 19th century (Huebner, 2005; Murray, 2003; Simonton, 2013; Woodley, 2012; Woodley & Figueredo, 2013).

Woodley has proposed a co-occurrence model: losses in national IQ brought about by the higher fertility of the less intelligent exist at the same time as IQ gains brought about by more favourable social environments. “A rising tide raises all boats, but some are leaky”. More specifically, the dysgenic loss should be concentrated on g as dysgenic fertility and mutation load are biggest on more g-loaded subtests (Peach, Lyerly, & Reeve, 2014; Prokosch, Yeo & Miller, 2005; Woodley & Meisenberg, 2013a). Secular environmental IQ gains (i.e. Flynn effects) are concentrated on ability specific sources of performance variance (te Nijenhuis & van der Flier, 2013; Woodley, te Nijenhuis, Must, & Must, 2014c), thus narrow abilities should be increasing in parallel. Key to this is the observation that heritabilities rise with increasing g loading (Kan, Wicherts, Dolan, & van der Maas, 2013; Rushton & Jensen, 2010; te Nijenhuis, Kura, & Hur, 2014). Heritability may therefore mediate the effect of both dysgenic selection and related factors, such as mutation accumulation, and environmental improvements on IQ scores, concentrating the effects of the former on g and the latter on the specific variances of cognitive abilities.

Woodley and Fernandes have done a re-analysis of the forwards and backwards digit scores, using sample-size-weighted least squares regression. As befits his aristocratic origins, Woodley does not deign to make it clear what A and B stand for. In my role as interlocutor I can tell you that, for no particular reason, A = Backwards digits and B = Forwards digits. If only I had been consulted! Airliners have crashed due to such poor labelling, and operating table errors due to these baffling descriptions don’t bear thinking about.

In summary, digits forwards have increased somewhat, digits backwards have decreased. Woodley appears to have got another indicator of mild dysgenic trends.

Selecting the best scrap of paper I could find in the oaken desk, I acknowledged the kind communication of this intriguing finding, and bade Woodley of Menie a Happy Easter. I bestowed the same valediction on the messenger boy, plus the customary shilling, and sadly without the shilling, wish you the same.

Sir, it is indeed gratifying to know that you and the good doctor Woodley endorse my belief in the reversed digit span as an essential component of a well-balanced IQ test: http://awesomescience.us/a-better-iq-test

Mark, thank you for linking me to your informative blog. It is always good when a physicist gets interested in psychology (Dick Feynman did). As far as I know there are few instances of psychologists breaking into Physics. If you come across any, let me know.

You're very welcome, James! Unfortunately, I think physics is difficult even for a physicist to break into. This is part of what I love about the soft sciences - they're still in their golden age, when low hanging fruit is ripe for the picking. To make breakthroughs in physics, one either needs billions of dollars in laboratory equipment, or else a focus so narrow and sophisticated that most laypeople won't understand one's research at all.

"I made sure that the messenger boy was kept outside, away from the under-maid": aha, the lass who occupies your second kitchen.

No kitchens here. Sculleries and larders!

I'm struck by the fact that the plots start from 1920 or so. In Britain (and there are perhaps analogies elsewhere) men would have become more used to memorising long numbers (forwards) by virtue of memorising their army numbers, national insurance numbers, and whatnot: in other words, it might be a consequence of the rise of the bureaucratic state. I wonder whether women showed a lesser drift upwards?

Oh yes, and add longer telephone numbers.

On a quick search the first reference I can find is Humstone, J.(1919). Memory Span Tests. Psychol. Clin., 12, 196-200, so I think that it is merely that at that stage memory span testing took on the digit span form. However, learning long numbers probably followed the rise in use of the telephone, as you suggest.

Digit span backwards looks pretty dang constant to me, especially when you factor in random sampling error from the earlier smaller samples.

Jayman, it is not a big effect, but there's something there. Make some allowances for data archaeology.

Has anyone reproduced Woodley's analysis here? I can't figure out why he's putting out this paper; whenever I try meta-analyzing Gignac's dataset the obvious way (fixed-effects meta-analysis weighted by N & SD, Year as moderator variable), the year variable isn't even *remotely* statistically-significant (nor are the backwards digit spans heterogeneous in the first place), but Woodley's paper claims 95% CIs which exclude 0...

My data & R code: http://pastebin.com/L2MDM1WR

Or inline:

    # "DST = Digit Span Total; W-B = Wechsler-Bellevue; WMS = Wechsler Memory Scale; WAIS = Wechsler Adult Intelligence Scale; MAS = Memory Assessment Scales; NA = not available."
    # standard deviations split out as separate columns
    digit <- read.csv(stdin(),header=TRUE)
    Source,Year,N,Ages,LDSF,LDSF.SD,LDSB,LDSB.SD,DST
    Wells and Martin,1923,50,Adults,6.3,NA,5.1,NA,11.40
    Wechsler,1933,236,Adults,6.60,1.13,NA,NA,NA
    Weisenburg et al,1936,70,18-59,6.69,1.02,4.87,1.16,11.56
    W-B,1939,1081,17-70,NA,NA,NA,NA,12
    WMS,1945,96,20-49,6.53,1.17,4.80,1.12,11.23
    WAIS,1955,1785,16-75,NA,NA,NA,NA,11
    WAIS-R,1981,1880,16-74,6.45,1.33,4.87,1.43,11.32
    MAS,1991,845,18-90,6.63,1.22,4.83,1.30,11.46
    WAIS-III,1997,2000,16-74,6.59,1.35,4.85,1.49,11.44
    WAIS-IV,2008,1900,16-74,6.72,1.31,4.84,1.39,11.4

    # 'Wells and Martin 1923' is missing SDs; following Woodley, impute an SD:
    # "...1.49 for DSB and 1.35 for DSF from the 1997 standardization of the WAIS-III, N = 2000, Wechsler, 1997"
    digit[is.na(digit$LDSB.SD),]$LDSB.SD <- 1.49
    digit[is.na(digit$LDSF.SD),]$LDSF.SD <- 1.35
    library(metafor)
    fe1 <- rma(measure="MN", method="FE", yi=LDSB, vi=LDSB.SD, ni=N, mods=Year, data = digit); fe1
    # Fixed-Effects with Moderators Model (k = 7)
    # Test for Residual Heterogeneity:
    # QE(df = 5) = 0.0307, p-val = 1.0000
    #
    # Test of Moderators (coefficient(s) 2):
    # QM(df = 1) = 0.0112, p-val = 0.9157
    #
    # Model Results:
    #
    #          estimate       se     zval    pval     ci.lb    ci.ub
    # intrcpt    7.8354  27.9588   0.2802  0.7793  -46.9629  62.6337
    # mods      -0.0015   0.0142  -0.1059  0.9157   -0.0294   0.0263
    plot(fe1)
    # https://i.imgur.com/P7rMfNQ.png
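A point that may be worth checking in the call above: metafor's `rma()` documents `vi` as the sampling variance of each estimate, which for a mean would be SD²/N rather than the SD itself. The base-R sketch below is neither the commenter's analysis nor Woodley's. It is an illustrative fixed-effect meta-regression worked by hand under that assumption, using the seven backwards-span rows from the table, the imputed 1923 SD of 1.49, and a year variable centred on 1970 purely for numerical stability. The names `dsb`, `Year_c`, `vi` and `fit` are hypothetical.

```r
# Backwards digit span rows with a reported mean (k = 7), from the table above.
dsb <- data.frame(
  Year = c(1923, 1936, 1945, 1981, 1991, 1997, 2008),
  N    = c(50, 70, 96, 1880, 845, 2000, 1900),
  LDSB = c(5.10, 4.87, 4.80, 4.87, 4.83, 4.85, 4.84),
  SD   = c(1.49, 1.16, 1.12, 1.43, 1.30, 1.49, 1.39)  # 1923 SD is imputed
)

# Assumption: the sampling variance of each mean is SD^2 / N.
dsb$vi <- dsb$SD^2 / dsb$N

# Centre the year so the design matrix is well conditioned (slope is unchanged).
dsb$Year_c <- dsb$Year - 1970

# Fixed-effect meta-regression by hand: b = (X'WX)^-1 X'Wy, with W = diag(1/vi).
X <- cbind(intercept = 1, Year_c = dsb$Year_c)
W <- diag(1 / dsb$vi)
V <- solve(t(X) %*% W %*% X)          # covariance matrix of the coefficients
b <- V %*% t(X) %*% W %*% dsb$LDSB    # weighted least squares estimates
se <- sqrt(diag(V))
z <- as.vector(b) / se

fit <- data.frame(estimate = as.vector(b), se = se, z = z,
                  p = 2 * pnorm(-abs(z)), row.names = colnames(X))
print(fit)
```

The `Year_c` row is the estimated change in mean backwards span per year under inverse-variance weighting. The standard errors here treat the sampling variances as known, which is the fixed-effect convention, so they will differ from those of an ordinary weighted `lm()`.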

[First part]

In computing significance and 95% CI’s for the results of N weighted regression you use the combined N of participants - this is because weighting by N simulates the results that would be obtained in performing a secondary analysis of the pooled raw data. Computing an N-weighted correlation is equivalent to simply duplicating each observation by the number of participants per observation. So, correlating (simulated) paired values of 1930, 0.2 (N=4), 1940, 0.3 (N=5), and 1960, 0.7 (N=6) is equivalent to correlating the following columns:

    X     Y
    1930, 0.2
    1930, 0.2
    1930, 0.2
    1930, 0.2
    1940, 0.3
    1940, 0.3
    1940, 0.3
    1940, 0.3
    1940, 0.3
    1960, 0.7
    1960, 0.7
    1960, 0.7
    1960, 0.7
    1960, 0.7
    1960, 0.7

The degrees of freedom in the above are not 3-2 (the number of observations), but 15-2 (the sum of the weights). With a combined N of several thousand, the results of most N-weighted regressions will be statistically significant. Some analytical software (e.g. certain versions of SPSS and Statistica) will calculate the correct significances and CIs automatically, some do not however, and require that these parameters be manually computed instead.
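The equivalence described above can be checked with a small base-R sketch. It uses the toy values as listed in the columns (1930 → 0.2 four times, 1940 → 0.3 five times, 1960 → 0.7 six times); the variable names are illustrative only.

```r
# Toy data: one row per "study", with n participants each.
year <- c(1930, 1940, 1960)
y    <- c(0.2, 0.3, 0.7)
n    <- c(4, 5, 6)

# N-weighted correlation, using weights proportional to n.
r_weighted <- cov.wt(cbind(year, y), wt = n / sum(n), cor = TRUE)$cor[1, 2]

# The same data with each row duplicated n times.
year_rep <- rep(year, times = n)
y_rep    <- rep(y, times = n)
r_expanded <- cor(year_rep, y_rep)

# Degrees of freedom that a standard test would use on the expanded data.
df_expanded <- unname(cor.test(year_rep, y_rep)$parameter)

print(c(weighted = r_weighted, expanded = r_expanded))
print(df_expanded)  # sum(n) - 2
```

The two correlations agree, and a test run on the expanded columns uses sum(n) − 2 degrees of freedom rather than 3 − 2. Whether duplicated study means should be treated as independent observations is exactly the point in dispute in this thread; the sketch only shows the arithmetic.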

There are some errors in the commenter’s replication attempt. I assume that he is attempting to weight by standard error of the mean (henceforth SE), in which case there are two ways of doing this. Firstly, he can use sample-specific standard deviation values, which necessitates that he impute the missing standard deviation value for the Wells and Martin datapoint. For this he should not use the SD value for the largest sample, but should instead use the correlation between SD and N to impute an SD value for the missing case based on its sample size (50). The correlations for both backwards and forwards digits are virtually perfect (r=.97 and .93 respectively), therefore he would assign the values of 1.15 (SE=.16, i.e. 1.15/√50) and 1.10 (SE=.16) to the backwards and forwards digit span means from Wells and Martin.
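The comment does not spell out how the imputation is done. One plausible reading, sketched below in base R as an assumption rather than the authors' actual procedure, is a simple linear regression of the reported backwards-span SDs on sample size, followed by a prediction at N = 50 and the usual SE = SD/√N. The data are the six backwards-span rows with a reported SD from the table above; the variable names are hypothetical.

```r
# Backwards digit span: studies that report an SD (from the table above).
n_reported  <- c(70, 96, 1880, 845, 2000, 1900)
sd_reported <- c(1.16, 1.12, 1.43, 1.30, 1.49, 1.39)

# Correlation between sample size and reported SD.
r_sd_n <- cor(n_reported, sd_reported)

# Assumed imputation method: linear regression of SD on N, predicted at N = 50.
fit_sd <- lm(sd_reported ~ n_reported)
sd_imputed <- unname(predict(fit_sd, newdata = data.frame(n_reported = 50)))

# Standard error of the mean for the imputed case.
se_imputed <- sd_imputed / sqrt(50)

print(round(c(r = r_sd_n, sd_imputed = sd_imputed, se_imputed = se_imputed), 3))
```

Under this reading the correlation and the imputed values come out close to the figures quoted in the comment for backwards digits; the forwards-span case would follow the same pattern with the `LDSF.SD` column.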

[Second part]

Alternatively, he could assign to each mean a fixed standard deviation value, using the largest value (i.e. the one that is least attenuated by sampling error; 1.49 for digits backwards and 1.35 for digits forwards), which is meta-analytically sound as it is a synthetic correction for sampling error.

The commenter has a good point - weighting by SE is very often useful, especially in meta-analyses where different indicators of the same construct, scaled in different metrics, are being compared (e.g. GRE and SAT scores), thus making them comparable. In the present case, however, the digit span measures are scaled equivalently, so weighting by SE is not necessary and simple N weighting is appropriate.

My co-author and I are fully aware of just how arbitrary significance is in the case of N weighted regression. In point of fact, in the first version of the paper, my co-author and I did not even report significance. We actually ended up doing so on the suggestion of an expert on meta-analysis.

The commenter will notice also that we don’t make a big deal out of the significance of the results. Instead, we explicitly state that more attention should be paid to the following:

i) The nomological validity of a given result (i.e. the degree to which it accords with theory and with the pattern of other results).

ii) The robustness of the particular result (i.e. the degree to which it withstands the application of alternative and more rigorous statistical procedures).

iii) The magnitude of the result (modular coefficients of >.5 are considered to be large magnitude by many polytomous effect size division schemata).

We are simply building on Gignac's findings and incorporating them into the broader theoretical framework of the Flynn effect, of which the co-occurrence model is now an established part.

@JayMan

N weighted regression deals with the problem of sampling error by assigning larger weighting terms to samples with bigger values of N (and commensurately lower sampling error), thus bringing out the "true" relationship amongst the variables. The trends presented in the paper are not being driven by the smaller samples, but by the bigger ones.

To understand this issue better, I suggest reading Chapter 3, specifically pages 95-96 in Schmidt and Hunter (2014).

Ref.

Schmidt, F. L., & Hunter, J. E. (2015). Methods of meta-analysis. Correcting error and bias in research findings (3rd ed.). Thousand Oaks: Sage.

> With a combined N of several thousand, the results of most N-weighted regressions will be statistically significant.

In this case, it seems it was not. As one would expect on a time-series where the most distant (and hence, most informative) datapoints are tiny like n=50.

> For this he should not use the SD value for the largest sample, but should instead use the correlation between SD and N to impute an SD value for the missing case based on its sample size (50).

I don't follow. The argument in your paper was that the SD of the large samples, with n=thousands and hence minuscule sampling-error, can be considered to be equivalent to the true population SD; given this assumption, then in smaller draws from that population, such as n=50, the best estimate of the unknown sample SD will be the same as the population SD, no? I don't understand this business about correlations.

> In the present case however the digit span measures are scaled equivalently, therefore weighting by SE is not necessary and simple N weighting is therefore appropriate.

It may be a simplification to drop the standard deviations, but it is useful information about how large population variability is, and so I am loath to omit it.

> ii) The robustness of the particular result (i.e. the degree to which it withstands the application of alternative and more rigorous statistical procedures).

So, you're writing a paper about an estimated slope which is:

- not remotely statistically-significant evidence for it being non-zero, and almost zero Bayesian evidence for non-zero values

- on a set of data which has no estimated heterogeneity beyond sampling error

- which, for the point-estimate to mean anything at all, requires that one pretend sampling error is not driving the estimate, that the measured populations are identical, that the change reflect g-related changes in the subjects rather than the large variance due to all other factors

- while claiming that the *other* variable's changes are driven by non-g factors in the subjects

I am not sure why one would want to write a paper on implications derived from noise and a tower of questionable assumptions.

> The trends presented in the paper are not being driven by the smaller samples, but by the bigger ones.

That is impossible. The early samples are also the smallest; estimates of a linear trend are dominated by the earliest and latest datapoints, because the predicted difference from the intercept is maximized at them, hence, the possible temporal trend is thanks in part to the smaller/earlier samples. (This is well understood for the theory of optimal experiment design, where one maximizes the power of experiments intended to detect a linear trend by allocating samples to the 2 extrema, and spending no samples on any intermediate points. See for example "Optimal Design in Psychological Research", McClelland 1997, pg4, which discusses the most efficient design for linear effects.) And we can see how much the early data points matter by simply dropping the *first* study and noting that the sign becomes *positive*:

R> fe2 <- rma(measure="MN", method="FE", yi=LDSB, vi=LDSB.SD, ni=N, mods=Year, data = digit[-1,]); fe2

...

mods 0.0001 0.0171 0.0038 0.9969 -0.0334 0.0335

In other words, this supposed decline in backwards digit span is *solely and completely* due to the one small early Wells & Martin 1923 estimate of 5.10 (n=50) compared to all the other estimates of ~4.8; without Wells & Martin, we would instead estimate a raw rise of 0.001 digits per decade. That is, the trend is being driven by not just the smaller samples, but by the smallest sample.
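For readers who want to try this kind of sensitivity check without metafor, here is a minimal base-R sketch of a leave-one-out analysis under plain N weighting, which is the scheme the paper's authors describe rather than the `rma()` call quoted above. The result of such a check depends on the weighting scheme, so this is not a reproduction of either side's numbers. The helper `n_weighted_slope` is hypothetical.

```r
# Backwards digit span means by study year, with sample sizes (table above).
dsb <- data.frame(
  Year = c(1923, 1936, 1945, 1981, 1991, 1997, 2008),
  N    = c(50, 70, 96, 1880, 845, 2000, 1900),
  LDSB = c(5.10, 4.87, 4.80, 4.87, 4.83, 4.85, 4.84)
)

# Slope of mean span on year, weighting each study by its sample size.
n_weighted_slope <- function(d) {
  unname(coef(lm(LDSB ~ Year, data = d, weights = N))["Year"])
}

slope_all <- n_weighted_slope(dsb)

# Leave-one-out: refit after dropping each study in turn.
slope_loo <- sapply(seq_len(nrow(dsb)), function(i) n_weighted_slope(dsb[-i, ]))
names(slope_loo) <- dsb$Year

print(slope_all)
print(round(slope_loo, 5))  # each entry: slope with that year's study removed
```

Comparing `slope_loo` with `slope_all` shows how much any single study moves the point estimate. Swapping `weights = N` for `weights = N / SD^2`, or for no weights at all, is a quick way to see how far the conclusion rests on the weighting choice.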

Comment 1: I don't follow. The argument in your paper was that the SD of the large samples, with n=thousands and hence minuscule sampling-error, can be considered to be equivalent to the true population SD; given this assumption, then in smaller draws from that population, such as n=50, the best estimate of the unknown sample SD will be the same as the population SD, no? I don't understand this business about correlations.

Response 1: If you want to weight by standard error of the mean you either use sample-specific standard deviations, or you fix the parameters using the largest standard deviation value as the reference value. You can choose one or the other, but not both. You are using the sample-specific standard deviations for each study where these data are available, but you then go and assign the largest value to the smallest study! If you are going to use the largest value as a reference value you apply it to all SE calculations, not just to one data point, as all data points with N’s smaller than the reference population are going to suffer from some degree of sampling error.

Comment 2: So, you're writing a paper about an estimated slope which is:

not remotely statistically-significant evidence for it being non-zero, and almost zero Bayesian evidence for non-zero values - on a set of data which has no estimated heterogeneity beyond sampling error which, for the point-estimate to mean anything at all, requires that one pretend sampling error is not driving the estimate, that the measured populations are identical, that the change reflect g-related changes in the subjects rather than the large variance due to all other factors

Response 2: False - the slope is significant at <.0001. How could a Beta value of -.55 with df=6839 NOT be significant?

On Bayesian reasoning, and its proper role in interpreting the current finding, I suggest you brush up on the concept of nomological validity (start with Cronbach & Meehl, 1955, Psychol. Bull, 52, 218). There is now a large nomological network of supporting evidences for the co-occurrence model. The fact that the trends detected in the present paper are consistent with expectations from the model and with the behavior of other elements in the co-occurrence nomological network increases the nomological validity of this finding – in addition to the prior probability of this latest result indicating something rather than nothing.

Comment 3: while claiming that the *other* variable's changes are driven by non-g factors in the subjects

Response 3: Find some solid evidence that Flynn effects are occurring on g and I’ll update my priors accordingly.

Comment 4: In other words, this supposed decline in backwards digit span is *solely and completely* due to the one small early Wells & Martin 1923 estimate of 5.10 (n=50) compared to all the other estimates of ~4.8; without Wells & Martin, we would instead estimate a raw rise of 0.001 digits per decade. That is, the trend is being driven by not just the smaller samples, but by the smallest sample.

Response 4: False – excluding the 1923 sample yields an N-weighted β of -.41 (df=6789, p<.01). But why stop with excluding the 1923 data point? If you are going to do a robustness analysis of this sort, you really should employ proper exclusion rules. Why not exclude all of the smaller, older studies (N<100)? When this is done the strength of trend with respect to the remaining large-sample cases is clear (β=-.74, df=6623, p<.01). What happens if we just look at the smaller, older studies separately? We get another strong indication of a trend (β =-.98, df=214, p<.01). Thus the overall trend is not being driven by an outlying value, but comprises two directionally consistent secular trends, the earlier of which has an exaggerated slope relative to the later one. The stronger slope across the earlier samples could well be due to sampling error. Simply regressing all of the sample scores against time, weighting each by sample size is therefore a perfectly sound way of deriving a valid point estimate of the overall temporal trend.

Comment 5: I am not sure why one would want to write a paper on implications derived from noise and a tower of questionable assumptions.

Response 5: Then you are not going to like what is about to hit the pages of Frontiers in Psychology. The co-occurrence model has now been directly and exhaustively tested and validated. My priors have never looked so good!

> Response 1: If you want to weight by standard error of the mean you either use sample-specific standard deviations, or you fix the parameters using the largest standard deviation value as the reference value. You can choose one or the other, but not both. You are using the sample-specific standard deviations for each study where these data are available, but you then go and assign the largest value to the smallest study! If you are going to use the largest value as a reference value you apply it to all SE calculations, not just to one data point, as all data points with N’s smaller than the reference population are going to suffer from some degree of sampling error.

This seems to be ignoring my point by equivocating on standard error when the question is about the standard deviation. If I do not know a parameter like a mean in a small sample, but I know the parameter exactly in the population, the best unbiased estimator of the sample's mean is going to be the population mean (possibly with a small-sample correction); of course sampling error means the sample's actual mean could be smaller or larger, but it will vary around the population mean, since the sample is drawn from said population. The standard deviation will not change; if I sample 100 people from a population with a true standard deviation of 10, then the sample standard deviations will dance around 10; if I sample 1000 people, then the sample standard deviation will be somewhere around... 10; if I sample a million people, the sample standard deviation will be somewhere around... 10. Because the generating population standard deviation is 10, so of course any sample will be yoked to that. If you run some simulations on a normal distribution comparing the mean of a bunch of sample SDs for various n with the true SD, they're going to look very similar. (eg something like `for (n in 2:6) { print(mean(replicate(10000, sd(rnorm(10^n, sd = 10))))) }`). If one has the SD value for a specific sample, of course it's better, but the most precisely estimated SD is the best if you're forced to impute.
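The inline loop above draws up to a million values per replicate, 10,000 times over, so it is slow as written. A lighter sketch of the same simulation, with smaller sample sizes and fewer replicates chosen here purely for speed, might look like this:

```r
set.seed(2015)   # arbitrary seed, only for reproducibility
true_sd <- 10
sizes <- c(10, 50, 100, 1000)

# For each sample size, average the sample SD over many simulated samples.
mean_sample_sd <- sapply(sizes, function(n) {
  mean(replicate(2000, sd(rnorm(n, sd = true_sd))))
})

print(data.frame(n = sizes, mean_sample_sd = round(mean_sample_sd, 2)))
```

The averages sit close to the true value of 10 at every sample size. The sample SD is slightly biased downward in very small samples, which is the small-sample correction alluded to above, but the gap is modest even at n = 10.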

Not sure this point is worth the space - if the real SD is larger like 1.49, it only means Wells & Martin 1923 (which is entirely driving the supposed decline) is even less informative than before. But moving onwards.

> Response 2: False - the slope is significant at <.0001. How could a Beta value of -.55 with df=6839 NOT be significant?

Where is this 'significant' slope? The p-value is not significant at <0.0001 because it's more like 0.99. Feel free to present code showing otherwise. As I said, I sure can't figure out how to get my meta-analysis code to spit out p<.0001 for a small decline in digit span based entirely on a n=50 sample.

> On Bayesian reasoning, and its proper role in interpreting the current finding, I suggest you brush up on the concept of nomological validity (start with Cronbach & Meehl, 1955, Psychol. Bull, 52, 218).

So in other words, despite the fact that the data is so scanty it cannot provide any sort of meaningful update of one's priors, you believe so strongly in dysgenics that you're going to ignore the fact of sampling error and just repeat your priors. So, in less fancy terms: GIGO.

> The fact that the trends detected in the present paper are consistent with expectations from the model and with the behavior of other elements in the co-occurrence nomological network increases the nomological validity of this finding

The fact that you explain away the trends you don't like, such as the forward digit span increase, and ignore trends you have no explanation for such as the increase in backwards digit span in recent decades (what is sauce for the goose is sauce for the gander), and claim it all confirms your model, shows the inferential bankruptcy of your model. One doesn't need to read Meehl to know that (although I'm surprised that a fellow Meehl fan puts such uncritical reliance on such weak arguments; Meehl was among the most cogent critics in psychology of assumptions like linearity & normality, the homogeneity of testing procedures, the constant low-grade background noise of changes in people and the 'crud factor', the questionable causal inferences derived from 'controlling', the abuse of p-values, and - particularly relevant in this context - how psychologists pretended to falsification while actually tendentiously winding their way through a series of underpowered tests of hypotheses and creating ad hoc explanations as necessary to preserve their theories).

> Find some solid evidence that Flynn effects are occurring on g and I’ll update my priors accordingly.

So when digit spans increase, then it can be on non-g factors (which ones, you don't know, but you feel free to write it off), but when it decreases, it cannot? You want to have your cake and eat it too.

> Response 4: False – excluding the 1923 sample yields an N-weighted β of -.41 (df=6789, p<.01).

Really? I've already provided the code for my analysis using metafor where excluding 1923 does not yield a negative coefficient. Again, feel free to point out where I coded up the analysis wrong (did I mistranscribe a datapoint? misuse metafor? misinterpret a parameter?) and provide your own corrected code, instead of continued assertion.

> Why not exclude all of the smaller, older studies (N<100)?

Sure. Now you've restored the sign... but managed to halve the effect:

fe3 <- rma(measure="MN", method="FE", yi=LDSB, vi=LDSB.SD, ni=N, mods=Year, data = digit[digit$N>=100,]); fe3

...mods -0.0009 0.0606 -0.0142 0.9887 -0.1197 0.1180

> Thus the overall trend is not being driven by an outlying value, but comprises two directionally consistent secular trends, the earlier of which has an exaggerated slope relative to the later one.

When the sign reverses when you drop the smallest study, it's not consistent. Any consideration of sensitivity would find this a big problem, which is why I brought it up in the first place!

> Response 5: Then you are not going to like what is about to hit the pages of Frontiers in Psychology.

Not sure what you mean there, but I hope whatever it is is better than this.

> These are my final remarks on the matter of the digit span paper:

This is unfortunate, as I find your responses are deeply unsatisfactory, as well as your strange reluctance to reveal anything whatsoever of your code and how you are actually calculating the numbers you claim.

> Response 1: Why are you weighting by the standard deviation?

Because the standard deviation is the relevant number for the particular datapoint being meta-analyzed, and the weight of studies is indicated by the N, both of which provide what one needs to know. Standard error is calculated from *them*, why are you precalculating it and running it through a weird correlation process? (I hate to stand on personal anecdotes here but of all the meta-analyses I've read in psychology, I can't say I've seen any where first studies' standard deviations were regressed on their Ns to yield something new; such a procedure seems to be non-standard.)

> If we are going to consider the effects of standard deviation let us at least do it properly – by factoring it into the model as a predictor on the basis that perhaps the trend is being driven by decreasing range restriction over time.

Range restriction... For data where the mean values are 4 SDs from the lower bound?

> Running an N-weighted least squares multiple regression (SPSS v.21) using year to predict backwards digit span scores, whilst controlling for study standard deviation yields a β value of -.647.

Using what SPSS code? Please provide it.

> I suggest that you update your code to reflect this meta-analytic reality.

How?

> Response 4: Contrary to your representation, Meehl always strongly advocated for the use of theory in deriving scientific predictions – that is what is being done in the paper under discussion.

He also advocated for the things I mentioned.

> Response 5: No, the decrease in one and increase in the other is explicitly predicted by the co-occurrence model.

Cite? I've read most of your earlier papers, and I do not recall any advance predictions to the effect that 'forward digit span will increase and backwards will decrease'.

> Response 6: Some code for executing N-weighted correlations in R can be found at the following url:

Irrelevant. I know perfectly well how to run weighted linear regression, and have already done so. A corr function is not a replacement for a mature meta-analysis library.

> Alternatively, just run the analysis in SPSS or Statistica, the latter will automatically compute the correct significances for you also.

I would be happy to run it in SPSS. If you would provide the code which yields the numbers and claims you have made here.

It seems that a comment made by Michael has been responded to by gwern, but it is not on screen. If any of you can retrieve that comment (some time after 6 apr 1.26 I presume) can you please copy it and post it up? I cannot find anything on my admin section of the blog, but we have had problems with comments being lost from time to time.

From Michael A Woodley of Menie

[This is a repost of a comment dated 06/04/2014. Chronologically, it is a response to Gwern 06/04/2014, and Gwern 07/04/2014 is in turn a response to this comment]

This is my final commentary on the matter of the digit span paper.

Let’s cut to the quick.

Comment 1: This seems to be ignoring…

Response 1: Why are you weighting by the standard deviation? Normally one weights by the standard error of the mean (SD/√N), inverse of the variance (1/SD^2), or, as in the case of the study under discussion, N, if the samples being evaluated use an equivalently scaled measure of the same construct. If we are going to consider the effects of standard deviation let us at least do it properly – by factoring it into the model as a predictor on the basis that perhaps the trend is being driven by decreasing range restriction over time. Running an N-weighted least squares multiple regression (SPSS v.21) using year to predict backwards digit span scores, whilst controlling for study standard deviation yields a β value of -.647. This is in fact larger than the N-weighted temporal correlation for this subset of the data (-.41). Note that only the seven studies reporting standard deviation values were used here. The 1923 study is not driving this trend, nor are standard deviation differentials between studies. If anything, the latter is suppressing the secular trend to some extent.

Comment 2: Where is this ‘significant’ slope….

Response 2: Once again, the correct value of N for determining the significance of the coefficients is the sum of the study Ns, as this gives you the correct degrees of freedom for N-weighted analysis. I suggest that you update your code to reflect this meta-analytic reality.

Comment 3: So in other words…

Response 3: The fact that you don’t see the relevance of nomological validity reinforces my impression that you need to brush up on it.

Comment 4: The fact that you explain away…

Response 4: Contrary to your representation, Meehl always strongly advocated for the use of theory in deriving scientific predictions – that is what is being done in the paper under discussion. I do not know what you mean by “inferential bankruptcy”, but in modern philosophy of science, theory is not inferred from data but tested against it. That is precisely what we are doing in the paper under discussion.

Comment 5: So when digit spans increase…

Response 5: No, the decrease of one and increase of the other is explicitly predicted by the co-occurrence model.

Comment 6: Really? I’ve already provided the code…

Response 6: The code for executing N-weighted correlations in R can be found at the following url:

http://web.mit.edu/~r/current/arch/amd64_linux26/lib/R/library/boot/html/corr.html

Alternatively, just run the analysis in SPSS or Statistica, the latter will automatically compute the correct significances for you also.
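For readers following the exchange above, here is a minimal Python sketch of what an N-weighted correlation computes: the ordinary Pearson formula with each study's contribution to the means, variances and covariance scaled by its weight. This is the same quantity the linked `corr` function is documented as returning, as far as I can tell. The function name `weighted_corr` is my own, and the numbers are invented purely for illustration; they are not the digit span data. The sketch also says nothing about how significance should be assessed, which is the point in dispute.

```python
import math


def weighted_corr(x, y, w):
    """Weighted Pearson correlation of x and y with non-negative weights w."""
    if not (len(x) == len(y) == len(w)):
        raise ValueError("x, y and w must have the same length")
    total = sum(w)
    if total <= 0:
        raise ValueError("weights must sum to a positive number")
    p = [wi / total for wi in w]  # normalise the weights so they sum to 1
    mx = sum(pi * xi for pi, xi in zip(p, x))  # weighted mean of x
    my = sum(pi * yi for pi, yi in zip(p, y))  # weighted mean of y
    cov = sum(pi * (xi - mx) * (yi - my) for pi, xi, yi in zip(p, x, y))
    vx = sum(pi * (xi - mx) ** 2 for pi, xi in zip(p, x))
    vy = sum(pi * (yi - my) ** 2 for pi, yi in zip(p, y))
    return cov / math.sqrt(vx * vy)


# Hypothetical illustration only: these are NOT the digit span data.
years = [1920, 1950, 1980, 2010]
means = [5.0, 4.8, 4.9, 4.5]
sample_sizes = [50, 200, 1000, 2000]

r_unweighted = weighted_corr(years, means, [1] * len(years))
r_weighted = weighted_corr(years, means, sample_sizes)
print(round(r_unweighted, 3), round(r_weighted, 3))
```

With equal weights the function reduces to the plain correlation across studies, so comparing the two printed values shows how much the large recent samples pull the estimate.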

I wonder whether mobile phones having a larger storage capacity shows up on these tests or has an effect? I remember when land lines were predominant and mobiles came with stands and a slot for an extra battery charger (one in the phone and one in the charger).

In my memory it was a point of pride among the youth to be able to remember and recall the mobile numbers of the social hierarchy. Granted, they didn't look at it as a mental exercise but rather as a source of status signaling. Do you think that the current high storage capacity of the mobile's contact list may show up on tests as an effect among the younger (say 1985 to 1995) millennials versus those born later?

It could be, but throughout all the telephone years up to 1966 UK cities had a three-letter, four-number structure. Guy's Hospital was HOP7600 because hop pickers came from Borough and went hop picking in Summer. Scotland Yard was WHI1212 for Whitehall, and so on. So you just said your neighbourhood, and then four digits. Now, as you say, numbers are no longer required, but passwords are, so unless we use the same one everywhere, that should keep us just as nimble.

Has anybody attempted to see if backwards digit span in fact follows a strictly normal curve?

Insofar as it expresses a fundamental brain mechanism or process that varies according to additive genes, one would expect that it would adhere pretty strictly to a normal curve.

It would be pretty valuable to know that a measure such as backward digit span is both a measure of real abilities and also follows a strictly normal curve.

With an estimated mean of roughly 5 and an SD of 1.5 or 2 (from memory), I doubt it is normal; it is more likely to have negative skew.
